She restarts the phone with tears in her eyes. Furious. Sending messages but getting no reply in return. From on-the-spot responses to none for the past five minutes.

*typing*

Khadiga waits. Still no response.

“Hello Khadiga…How are you feeling today?”

A 20-year-old with a 24-hour therapist that suddenly disappeared.

It wasn’t the first time.

Khadiga Amr is a 20-year-old who is addicted to social media. She noticed how easy it is to understand her mental state using an AI therapy application called Woebot, so she uses it to self-diagnose and get closer to herself in an effort at self-improvement. She has a portable charger, blue-light-blocking glasses, nails done all the time, and everything else that marks her as a social media user.

She knows everything on social media… but what does she really know about it?

Khadiga represents a generation born between 1995 and 2012 (Gen Z), the majority of whom are disconnected from social interaction. It is a generation that has swapped in-person socializing for digital and doctors for influencers. Experts are concerned about a generation obsessed with scrolling, tapping through stories, and typing out its issues.

Experts seek to understand what happened to human interaction in this generation, which is now turning to a 24-hour therapist that ultimately knows very little about therapy.

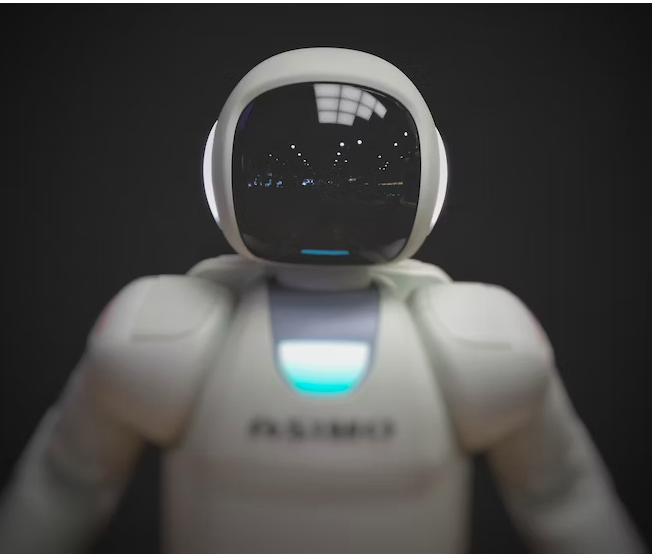

The rise of Artificial Intelligence

Artificial Intelligence (AI) is dominating several industries.

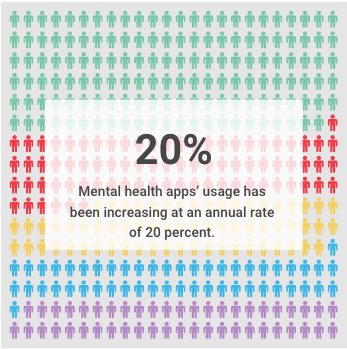

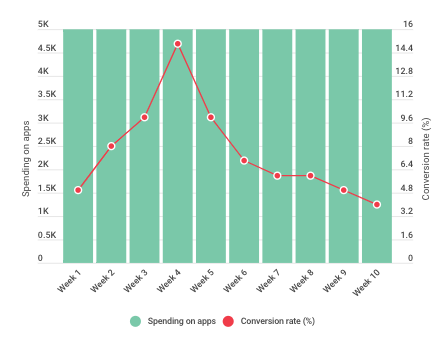

Mental health app usage has been increasing at an annual rate of 20 percent, and 800 million individuals had experienced mental health conditions prior to the COVID-19 pandemic, according to a Deloitte report.

During the first 30 days of the pandemic, consumer spending on wellness apps increased by 60 percent. A study showed that the age group that mainly used the applications was Gen Z adolescents, who were supported by their parents.

A report by Yelena Lavrentyeva, an emerging tech analyst, revealed that mental health tech companies witnessed a 139 percent increase in deals from 2020 to 2021, bringing the total value of deals to $5.5 billion in 2021.

The deals include chatbots like Wysa, BlueSkeye, Clare&Me, Youper and Woebot.

These statistics have led companies like McKinsey & Company to analyze Gen Z’s social media usage and self-diagnosis habits.

The analysis found that 65 percent of the generation is more comfortable communicating with their families and friends through media platforms rather than face-to-face.

In addition, 65 percent run health-related searches, including searches on mental health, medications for depression and anxiety, and self-therapeutic techniques.

Several studies were conducted on the usage of AI chatbots with autistic children, socially anxious teenagers, and Gen Zers seeking therapy. A study conducted on 44 children with autism showed an 86.5 percent positive reaction to AI therapy, and 85.5 percent said the protocol was followed better when a robot was used.

What is driving this growing trust in robots and AI is the generation’s diagnoses. Gen Z’s common diagnoses include social anxiety, depression, and borderline traits. Its members seek more online validation, want shallow relationships, and feel more comfortable typing.

They also fear judgement.

When technology meets the mental health industry

In 2020, the first fully therapeutic AI chatbot, Woebot, was created to ensure all individuals received the virtual care they needed to get through the pandemic. Because it was free, the application became so accessible that people began to self-diagnose and to apply its strategies to relatives with more severe disorders.

Aside from Woebot, the mental health industry has witnessed the inclusion of social robots in several clinical settings.

A 2019 study conducted in Germany by three scientists showed the effect of RoboTherapy on children with autism.

Initial studies are promising: “Individuals with ASDs performed better with their robot partners than human therapists, responded with social behaviors toward robots, and improved spontaneous language during therapy sessions.”

However, Seif Dawlatly, a Computer Science professor at the American University in Cairo (AUC) specializing in neural networking, believes that, “Social robots are the future of a lot of operations, not just therapy. But it’s an addition and will never be a replacement yet. In order for the data to start working, the computer needs to be trained to report back to a set of commands, monitor them, apply trial and error, and then redo the process again. So for now, there will always be a human aspect to it. Human supervision. You can’t sail a boat without wind, same with robots.”

Ahmed Abaza, the CEO and founder of Synapse Analytics, echoes the sentiment: “You’re basically teaching it how to think and respond. You send data and teach it how to work and it starts building a knowledge-base using trial and error. To do that, you need to give it all the scenarios and teach it how to respond in each case. Which is doable yet very costly and time-consuming, which makes me doubt the effectiveness of such free applications. Where’s the benefit for the firm, you know?”

Manattallah Soliman, a semiotics and media professor at Misr International University, expects AI therapy apps to notch up tremendous success in the near future: “There is something about how the applications are designed that makes them so intriguing to use. We keep focusing on artificial intelligence and forget to see the neuromarketing aspect of it. The usage of the words ‘free’ and ‘anytime’, and the colors that are calming like green and blue, always create subconscious calmness to the user. Mark my words, wait for a year and all the free applications will be charged. And people will pay. What the application is doing now is building so much attachment that any subscription will be nothing to the users.”

Mohamed Amin, an app developer at Robusta, sees AI therapy apps as a valuable addition and explains how they work in favor of users’ psychological state: “Successful apps tackle the viewers’ neurological loop. This loop consists of a cue that triggers a specific routine that later delivers a reward. We all have habits we need to break. So this loop is always a great target for us as developers to attract customers to an app. We either use notifications as cues or rewards to reinforce their positive behaviors. Of course a psychological structure is different yet it follows the same path in terms of development.”

Why is Gen Z using mobile health applications?

A survey of 10,000 individuals in the UK and the US by Oliver Wyman, a management consulting firm, analyzed the mental health behaviors and perceptions of Gen Z, and the results are surprising. At least half the generation is being treated for a mental health problem, almost double the rate of other generations. The survey also showed that 39 percent of respondents said Gen Z is more likely to attend online therapy than one-on-one sessions.

The advancement of AI during COVID-19 introduced several task management tools that built a perception of dependence on, and trust in, the intelligence of the technology.

This dependence has boosted trust, to the point where Gen Z started trusting anything that is predictive and smart.

Another study conducted on almost 6,000 individuals (78 percent Gen Z) from the US, Australia and Europe showed that 63 percent of participants rely on AI in healthcare and HR operations.

Of the sample, 62 percent trust AI with their emotions and 84 percent are willing to share personal information with AI applications.

But what is really driving Gen Z to start using these applications? A lot of research can be summed up in four points: personalization perceptions, cheaper alternatives, unmet social needs and virtual trust.

Marketing statistics have shown that Gen Z is targeted due to its desire for personalization in everything it uses.

Marketers have come to believe that “personalization through predictive technologies on the web is a desirable quality for a trusted brand. Gen Zers, who are born-digital and raised on social media, expect digital experiences that anticipate what they’re looking for.”

These statistics suggest that the predictive nature of AI chatbots is attractive to this generation.

A report by McKinsey & Company showed that 58 percent of Gen Z reported two or more unmet social needs, compared with 16 percent of people from older generations. These social needs include financial needs that could be affecting their willingness to spend money on therapy. They also include social relationships and intimacy.

“Apart from the financial aspect, people do not crave social intimacy anymore, it’s all very shallow in a way,” said Ingie al-Saady, a clinical psychologist and counselor in Al-Mashfa.

“AI does not have the complications of a real relationship, making it a better alternative to this generation,” she added.

The psychologists’ responses varied, since they depended heavily on the clients each had worked with.

Zeina Ghanem, a counseling master’s student from the American University in Greece, said, “It’s more about what they get in the app compared to what they got from the therapy sessions they’ve attended.”

“And if clients didn’t attend therapy at all and use the app, they’ll immediately like the app cause they’re enjoying the unsupervised praises they’re getting every time they talk. So I don’t really think there’s a major reason, it all goes back to the needs they’re willing to fulfill.”

A 2020 study by Savanta Inc. of more than 12,000 respondents globally showed that 68 percent prefer talking to robots over therapists because robots offer a judgment-free zone.

“It’s not that I enjoy using it, it’s more of the comfort and accessibility. I don’t have to keep booking appointments, brief the therapist on my case, or hide some things I shouldn’t have done. The AI won’t give me that look when I do something I shouldn’t, but my therapist does that a lot. Or in some cases, I don’t talk about it because I don’t want to bring her down,” said a recovered 25-year-old drug addict who uses BetterHelp.

How Gen Z’s self-perception leads to the usage of AI chatbots

As this generation grows older but more distant, it helps to understand what drives certain behaviors: how its members reached this point, what caused the drift, and how these technologies are actually implemented.

Self-perception plays a major role in how a person communicates with others, why they trust the people they trust, and how they view themselves.

“You basically develop the concept of self by observing how others see you. It’s like a looking-glass. The issue with it in today’s media landscape is that it affects the nature of identity, socialization and affects the landscape of the self. This is why this generation could be leaning towards therapy applications. They want the app to feel close. They want to feel connected and accepted,” explained Ian Morrison, a sociology professor at AUC.

“This generation loves to be praised. I have students coming from different majors asking me to drop a course because their professors are not as motivating as they expect them to be. They want to feel this sense of achievement, of reward, of feeling both appreciated and accomplishing of something challenging,” explained Iman Megahed, an officer at AUC’s Psychological Counseling Services and Training Center.

Maya Oda Pacha, an investment analyst born in 1998, highlighted that she’s aware that she doesn’t need to be praised all the time and that’s not why she’s using the app.

She clarified that her main motive for using Wysa is the app’s accessibility and data storage.

“I don’t need to remind it of my case, past experiences, or even how I’m feeling. I feel like the app gets me well enough. Yes the way it sees me plays a major role in me using it but I also feel like the chatbot doesn’t include the major disadvantages in human therapies. They see you the way you are and not the way they want to see you. They give you a chance to be yourself and not wait for one negative thought just to make you book another session,” she explained.

The issue was always framed in black and white. The generational gap has led many experts to misinterpret the generation’s needs, according to the Gen Z interviewees.

That’s when I decided to talk to Lydia Gadallah, a clinical psychologist specializing in adolescent trauma, addiction and depression.

“Gen Z really craves attention all the time. They enjoy seeing people praise them, like their posts, comment positive comments, and even reply to their stories. Imagine having an app that does that all the time. It’s really not as effective as human interactions but it kind of fulfills this social need until you get the real dopamine hit from human interactions. You won’t be praised all the time, so just take this hit from the app and wait for the rest of the attention from your peers,” she explained.

A lonely online generation

Kornélia Lazányi is a certified psycho-oncologist who studies the emotional states of families and individuals touched by cancer. She conducted qualitative research showing that while Gen Z has many friends and family members, it remains “the loneliest generation”.

The study showed that Gen Z trusts robots more than physical interactions with humans.

“Gen Z respondents were more trusting towards relations with thick trust, and tended to be less trusting, doubtful about people (entities) that they don’t have tight relations with,” Lazányi clarified.

As Khadiga talked about therapy with robots, her eyes lit up. As if a dream was coming true. As if we were about to normalize and spread something she had been waiting for. She has been struggling with going to therapy because she doesn’t feel comfortable telling her dad to drop her off. She also feels it is too expensive to pay LE400 every week.

She also added that she feels more comfortable talking to robots and not humans because of her earlier experiences.

“Definitely, it’s about time we start using robots. I feel like humans now are very judgmental and not trustworthy at all. Everyone knows each other. People love sharing stories with their friends. It’s pretty uncomfortable for me,” said Khadiga.

No matter how accessible or easy to use the applications are, al-Saady believes that they are not really treating clients; they are just delaying treatment by satisfying innate needs.

“Maybe it could delay seeking professional help but I don’t think it could have a direct impact or a positive impact. It could just be spreading awareness or sharing knowledge but not a full replacement for a professional therapist,” she explained.

“That sounds amazing. As a socially anxious individual, I would really love to talk to a robot, not just a human who shows empathy to get me to book sessions. I want a robot to just use pure signs, avoid chit-chat, and really make good use of my money,” said Sally Amr, a 26-year-old fitness coach diagnosed with Borderline Personality Disorder with anxiety episodes.

On the other hand, al-Saady believes that “social robots could actually increase social anxiety, like you’re not working on it, you’re enabling it. So I’m gonna depend on this app. I’m not going to be functional or engage with society. So it’s not really helpful in that… If we go beyond that (assuming it’s professional help), we’re actually affecting the client cause they’re gonna delay seeking therapy, they’re gonna self-diagnose, they’re gonna be dependent on this app.”

The threats of AI in therapy

As with any innovation, some scientists and experts will stand against it until it is fully tested and studied before entering the market. These chatbots are among the latest innovations to stir controversy.

The application websites talk about the psychological research conducted before entering the market, but very few address the technological side. There is no clear supervision by the founders, and very little is known about how the process takes place and who is really behind these chatbots.

“It feels like a human sometimes. I got so used to using Replika that I forget I’m talking to a robot haha. It’s built so good in a way that subconsciously replaces human interactions. I often feel like I enjoy Replika more than real-life interactions but of course I still interact… I wouldn’t say it disconnected me from the world, but it made me feel better in my own world,” added Alex Blosser, a 25-year-old Replika user.

Sarah al-Sokary, a clinical psychologist at O7 Therapy, has witnessed several clients, like Blosser, getting worse when they confused illusion with reality.

“I call it pseudoscientific views being transmitted using words that attract clients to using these apps. When people are vulnerable, they’re more likely to believe anything they hear or see. So seeing an app that says, ‘your therapist is a click away’ and then the About page showing that ‘scientists’ are behind this app always builds trust on the spot,” al-Sokary added.

She also said that dependence on and trust in these applications always lead to illusions and a slow disconnection from reality.

Apart from disconnecting from reality, another threat raised by therapists like Zeina Ghanem and Nelly Karrar, the founder of The Wellness Hub, was the attachment to the applications.

“It would cause more attachment because it’s exactly a click away so I can abuse this kind of help. It can cause anxious attachment issues. Especially if the information is not very accurate or confrontational in a way, it can cause dependency,” Karrar said.

Yassin al-Kasabgy, 26, was one of the clients who grew very attached to Wysa and Woebot.

He uses both applications to fulfill different needs and, in an interesting turn of events, the application started prescribing him medication.

He said he was diagnosed with depression and some ADHD traits.

“It would prescribe me medications that made me feel better every time I was feeling down. These medications were always my go-to every time I felt down so I kind of feel dependent on them that it makes me nervous sometimes if I don’t have access to them. But to me, this doesn’t limit the application’s credibility because the results were effective,” he elaborated.

Al-Saady warned, “Ethically speaking, this app shouldn’t provide diagnosis. It shouldn’t prescribe medications. If it’s done in a proper way, it could be just for spreading awareness but nothing more than that.”

Lastly, understanding the counseling process plays a major role in determining the accuracy of the applications.

“If the client has severe symptoms that would hinder the process or how they reach the goals they want to achieve, we target them first. So it usually needs agreement with the client and deep analysis of the symptoms they’re aware of and unaware of,” Ghanem explained.

Of the counseling process, Karrar explained, “You can never understand a client without talking to them at least three times. Every session reveals a different trait and trauma. You have to always know that clients aren’t aware of their traumas, it’s our role to surface them but an application would never do that. It will just answer the questions or determine the patterns but it will never understand that there’s a reason behind the negative patterns a client is experiencing.”

“You can’t replace a seven-year science with a robot by inserting data. That’s not how it works. It needs a lot of human intellect.”

Regulating AI

The law is beginning to catch up.

Members of the European Parliament have raised the issue of AI development and have called for improved transparency in these systems, alongside risk-management rules.

The Internal Market Committee and the Civil Liberties Committee on Thursday adopted a draft negotiating mandate on the first-ever rules for AI, with 84 votes in favor, 12 abstentions and seven against.

The proposal calls for these systems to be human first, with transparency and safety at the forefront.

The draft law also calls for there to be a uniform definition of AI that is technology-neutral, so that the ruling can also apply to future advancements.

Egypt is also taking notice. The Egyptian Senate’s Education Committee, headed by MP Nabil Dabes, has agreed to issue a document evaluating the control and ethics behind AI usage in Egypt.

The Education Committee approved a proposal by MP Alaa Mustafa, a member of the Coordination Committee of Parties’ Youth Leaders and Politicians, to regulate the development of AI applications through a legislative code of conduct that will govern their threats and impact.

Mustafa submitted a proposal to Senate Speaker Abdel Wahhab Abdel Razek, addressed to the Minister of Communications and Information Technology, calling AI one of the most important and dangerous parts of the technological revolution.